Course Project

Addiction Simulation

Developing a simulation to visualize the pathway and process of addiction to an opioid.

Role

Developer

Timeline

4 weeks

Skills

Python

Machine Learning

Tools

Google Colab

Team

Jay Madan

Colette Lee

Purpose

What is Addiction?

Addiction is a chronic disorder characterized by the dysregulation of the brain's reward and control systems, leading to compulsive behaviors despite negative consequences. It primarily involves the prefrontal cortex, ventral tegmental area, and nucleus accumbens, and results from a mix of neurobiological, psychological, and environmental factors.

Our focus is the progression of addiction over time, specifically to heroin, an opioid that rapidly increases dopamine release. By binding to μ-opioid receptors on inhibitory GABA neurons in the ventral tegmental area, it releases nearby dopamine neurons from inhibition. The resulting dopamine surge in the nucleus accumbens produces pleasure and euphoria and, through the mesolimbic reward system, reinforces drug-seeking behavior via conditioned associations.

Chronic heroin use leads to dependence, tolerance, and significant brain changes, establishing a new "set point" for pleasure and driving ongoing addictive behavior. Understanding these mechanisms is crucial for developing effective treatments and interventions, as addiction imposes severe personal, societal, and economic burdens.

Scope

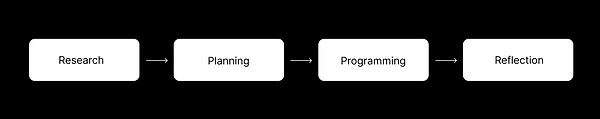

Our Process

Define a Non-Addicted Model

- Establish how a baseline (set point) operates when exposed to a less addictive stimulus.
- Analyze the model's responses and behaviors across varying degrees of exploration to understand normal functioning.

Develop an Addicted Model

- Enhance the non-addicted model to simulate addiction by introducing factors that alter its baseline behavior.
- Examine how the addicted model behaves differently from the non-addicted model at different exploration levels.

Analyze Addiction Over Time

- Investigate how the addicted model selects new rewarding stimuli despite the absence of immediate rewards.
- Assess the probability of relapse within the model, identifying the triggers and conditions that lead to renewed addictive behavior (withdrawal and relapse).

Implementation

Creating the Model

We use an epsilon-greedy agent as the foundation for our reinforcement learning models and a 4-armed bandit task as the experimental environment. Each arm returns a reward R with probability p. The non-addicted, addicted, and relapse models all learn by reinforcement, updating the reward prediction Q for the chosen arm with the formula:

Q ← Q + α × (R − Q)

Here, α is the learning rate, which sets how far each update moves Q toward the latest reward; Q is the estimated average reward of the chosen arm; and (R − Q) is the reward prediction error. The same update drives learning in both the addicted and non-addicted models within the bandit task.
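To make the update concrete, here is a minimal Python sketch of the rule applied to a single arm. The learning rate α = 0.5, the reward sequence, and the helper name `update_q` are illustrative assumptions chosen so the arithmetic stays exact; the page does not state the α the project used.

```python
def update_q(q: float, reward: float, alpha: float) -> float:
    """Apply Q <- Q + alpha * (R - Q) and return the new estimate."""
    prediction_error = reward - q  # R - Q, the reward prediction error
    return q + alpha * prediction_error


# Illustrative values (assumptions, not the project's settings).
alpha = 0.5
rewards = [1, 0, 1, 1]  # binary rewards from one arm

q = 0.0
history = []
for r in rewards:
    q = update_q(q, r, alpha)
    history.append(q)

print(history)  # [0.5, 0.25, 0.625, 0.8125]
```

With α = 0.5 each estimate moves halfway toward the latest reward; a smaller α makes Q an average over a longer stretch of past rewards.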

For each model, we deploy four agents with decreasing epsilon values [0.1, 0.075, 0.05, 0.02] to simulate different exploration-exploitation trade-offs. Epsilon (ε) is the probability that an agent picks an arm at random rather than the arm with the highest Q, so lower values favor exploitation over exploration and stand in for more addiction-prone personalities. Although addiction is influenced by many factors, manipulating epsilon offers a simple way to model different predispositions to addiction.
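The setup described above can be sketched as a small Python simulation: four epsilon-greedy agents, one per epsilon value, each learning on a 4-armed bandit with binary rewards. The arm reward probabilities, α = 0.1, the 1,000-step horizon, the fixed seed, and the helper name `run_agent` are assumptions for illustration, not the values the project used.

```python
import random


def run_agent(epsilon, probs, n_steps=1000, alpha=0.1, seed=0):
    """Run one epsilon-greedy agent on a Bernoulli bandit.

    probs[i] is the chance that arm i pays out a reward of 1 (else 0).
    Returns the final Q estimates and how often each arm was chosen.
    """
    rng = random.Random(seed)
    n_arms = len(probs)
    q = [0.0] * n_arms
    counts = [0] * n_arms
    for _ in range(n_steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick any arm at random
        else:
            best = max(q)
            # exploit: pick the highest-valued arm, breaking ties at random
            arm = rng.choice([i for i, v in enumerate(q) if v == best])
        reward = 1 if rng.random() < probs[arm] else 0
        q[arm] += alpha * (reward - q[arm])  # Q <- Q + alpha * (R - Q)
        counts[arm] += 1
    return q, counts


# Assumed reward probabilities for the four arms (hypothetical values).
ARM_PROBS = [0.2, 0.4, 0.6, 0.8]
EPSILONS = [0.1, 0.075, 0.05, 0.02]

results = {}
for eps in EPSILONS:
    q, counts = run_agent(eps, ARM_PROBS)
    results[eps] = (q, counts)
    share = max(counts) / sum(counts)
    print(f"epsilon={eps}: most-chosen arm share = {share:.2f}")
```

Ties are broken at random because every Q starts at 0; always taking the first maximal arm would bias every agent toward arm 0 before it has learned anything.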

Results

Outcomes of the Model

The models illustrate how addiction modifies reward perception and decision-making over time. They depict the transition from initially exploring multiple options (the four arms) to behavior akin to "craving," in which focus narrows to a single reward source even as its actual rewards diminish. This progression mirrors the neurobiological changes that underpin dependence, such as reduced receptor sensitivity after prolonged substance exposure, and parallels how addicted individuals increasingly prioritize the substance and its perceived rewards over other rewarding activities.
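The page does not spell out how the addicted model alters the baseline. One established way to reproduce the narrowing described above, borrowed here from Redish's (2004) temporal-difference account of addiction rather than taken from this project, is to give the drug arm a bonus D in its prediction error that learning can never cancel: δ = max(R − Q + D, D). The sketch contrasts that with the ordinary update when an arm stops paying out; D = 0.25, α = 0.5, and the helper names are assumptions picked to keep the arithmetic exact.

```python
def natural_update(q, reward, alpha):
    """Ordinary update: Q <- Q + alpha * (R - Q)."""
    return q + alpha * (reward - q)


def drug_update(q, reward, alpha, drug_bonus):
    """Hypothetical drug-arm update with a non-compensable bonus D.

    The prediction error is max(R - Q + D, D), so it never drops below D
    and Q keeps rising no matter how small the actual reward becomes.
    """
    delta = max(reward - q + drug_bonus, drug_bonus)
    return q + alpha * delta


alpha, drug_bonus = 0.5, 0.25  # assumed values, not from the project
rewards = [0, 0, 0, 0]  # the arm has stopped paying out entirely

natural_q, drug_q = 0.5, 0.5  # both arms start from the same estimate
natural_history, drug_history = [], []
for r in rewards:
    natural_q = natural_update(natural_q, r, alpha)
    drug_q = drug_update(drug_q, r, alpha, drug_bonus)
    natural_history.append(natural_q)
    drug_history.append(drug_q)

print(natural_history)  # [0.25, 0.125, 0.0625, 0.03125]
print(drug_history)     # [0.625, 0.75, 0.875, 1.0]
```

Under the ordinary rule the estimate decays toward the true reward of 0, so an epsilon-greedy agent would eventually switch arms. With the bonus, the drug arm's estimate grows by at least αD on every pull regardless of payout, so a low-epsilon agent keeps selecting it.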

Limitations

A key limitation of our model is its simplicity: it reduces the complex neurobiological processes involved in addiction to a simple reinforcement learning task. For instance, while the reward probability varies, the reward value remains binary (0 or 1). Although we use epsilon values to represent different levels of exploitative and exploratory behavior, the model does not account for individual and environmental factors such as predisposition to substance use, family history, age, and other psychological influences. Furthermore, individual responses to drug use are heterogeneous, and our model does not capture the spectrum between "addicted" and "not addicted."

Implications

The model offers insight into the behavioral and neurobiological changes associated with addiction, aiding understanding of addiction mechanisms in general or for particular drugs. It can inform interventions that help individuals or their support networks recognize and address addictive behaviors early. Because the model shows reward perception narrowing progressively, it underscores the importance of timely intervention. Future enhancements could tune variables to reflect the neurochemical effects of drugs at a molecular level, so the simulation can represent their impact on reward perception more accurately. The model can also serve as a baseline for identifying common addiction patterns, irrespective of the specific substance involved.